Tweets

Replying to @josecastillo

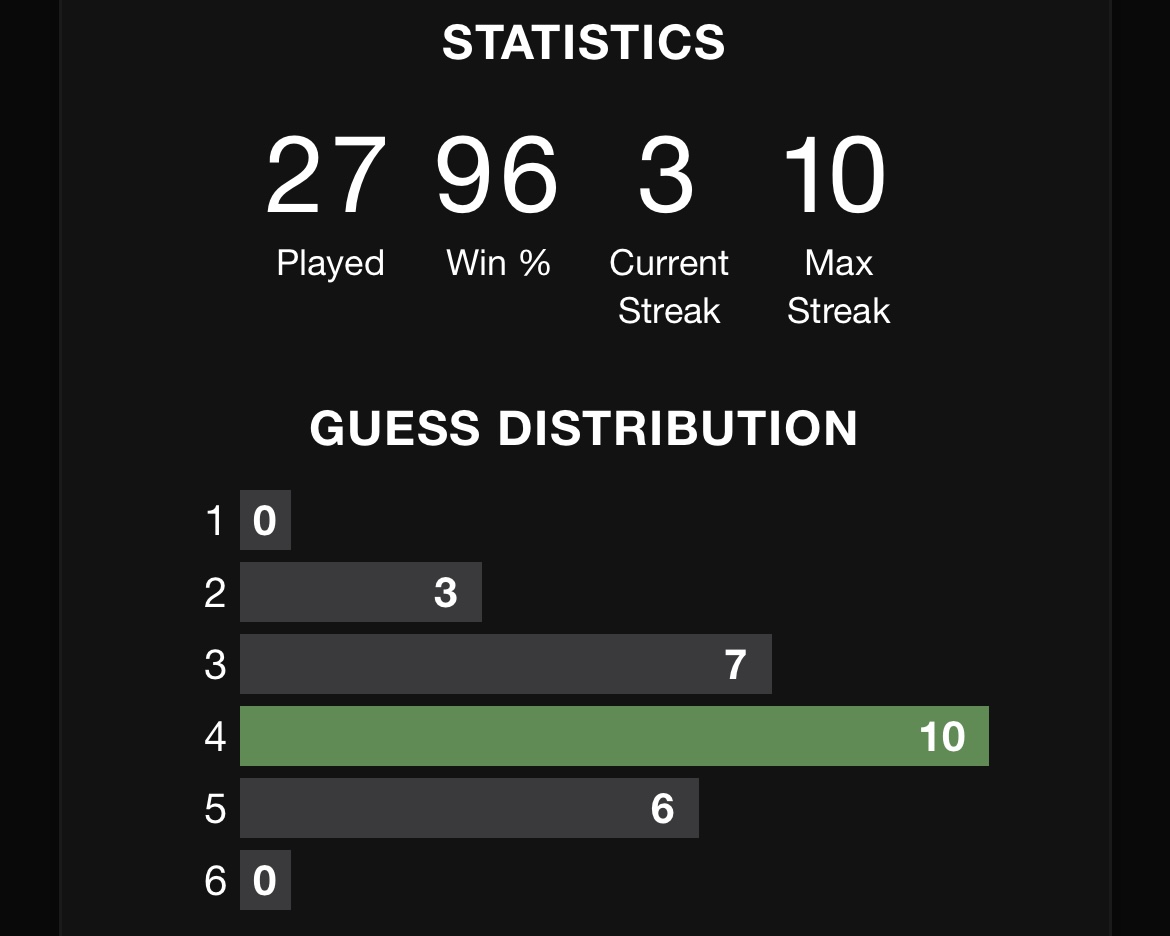

my bell curve is leaning. Wordle 246 4/6*

🟨⬛🟨⬛⬛

⬛🟩⬛🟩⬛

⬛🟩⬛🟩🟨

🟩🟩🟩🟩🟩

(original)

Replying to @Automatid

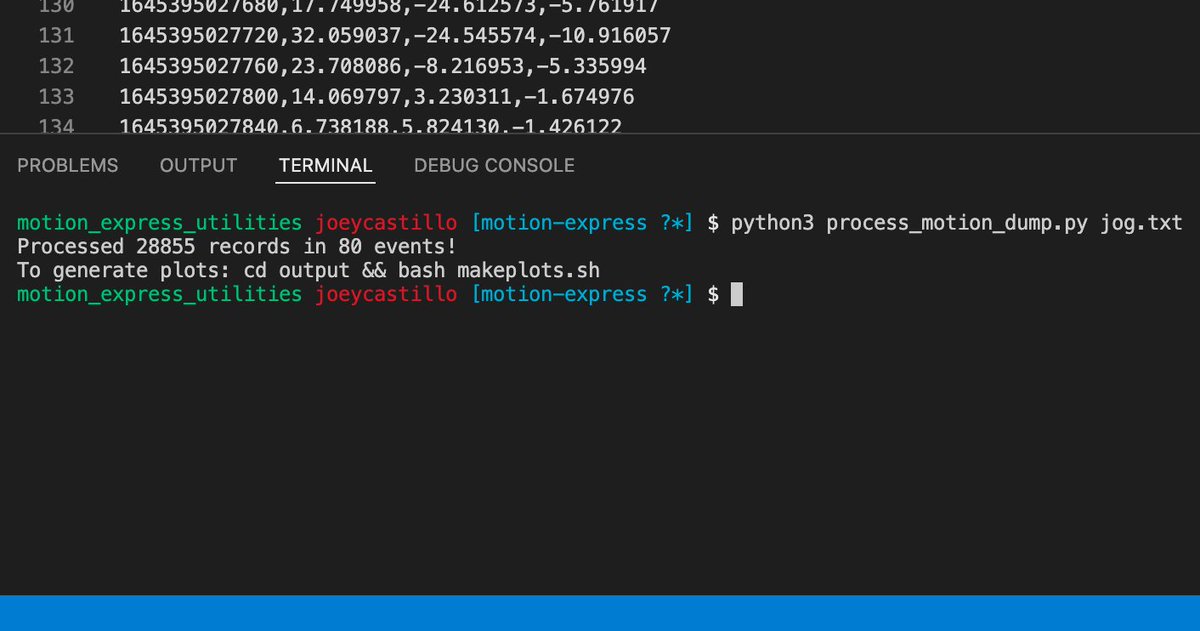

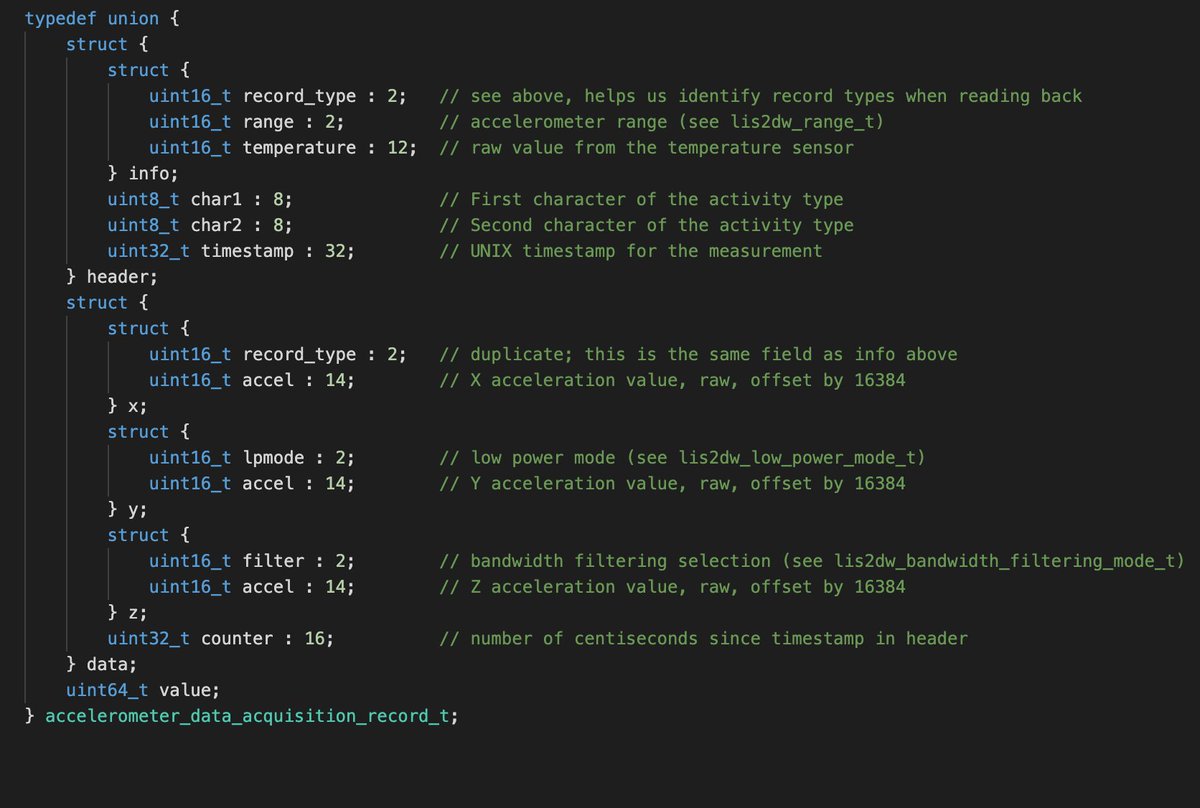

the raw reading is 14 bits, but if we did the math and turned them into floats, each would take 4 bytes × 3 axes, plus 2 bytes for the counter and some more for parameters; now each reading is 16 bytes. Bit packing the raw values lets us fit a full data point in just 8 bytes.

(original)
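
A minimal sketch of the packing described in the tweet above. The field layout is an assumption for illustration (three signed 14-bit axes, a 16-bit counter and 6 leftover parameter bits, which happens to add up to 64 bits); the actual firmware layout isn't given in the thread, and the function names are hypothetical.

```python
import struct

AXIS_BITS = 14
AXIS_MASK = (1 << AXIS_BITS) - 1
SIGN_BIT = 1 << (AXIS_BITS - 1)


def pack_reading(x, y, z, counter, params):
    """Pack 3 signed 14-bit axes + 16-bit counter + 6 param bits into 8 bytes.

    Assumed layout, most significant bits first: x | y | z | counter | params.
    """
    word = 0
    for value in (x, y, z):
        if not -SIGN_BIT <= value < SIGN_BIT:
            raise ValueError("axis value does not fit in 14 bits")
        # Keep the low 14 bits of the two's complement representation.
        word = (word << AXIS_BITS) | (value & AXIS_MASK)
    word = (word << 16) | (counter & 0xFFFF)
    word = (word << 6) | (params & 0x3F)
    return struct.pack("<Q", word)


def unpack_reading(blob):
    """Reverse pack_reading, sign-extending each 14-bit axis."""
    (word,) = struct.unpack("<Q", blob)
    params = word & 0x3F
    word >>= 6
    counter = word & 0xFFFF
    word >>= 16
    axes = []
    for _ in range(3):
        raw = word & AXIS_MASK
        word >>= AXIS_BITS
        axes.append(raw - (1 << AXIS_BITS) if raw & SIGN_BIT else raw)
    z, y, x = axes  # fields come back out lowest-first
    return x, y, z, counter, params


# For comparison: three floats plus a 2-byte counter, before any parameters.
FLOAT_RECORD_SIZE = struct.calcsize("<3fH")
```

Packing into a single 64-bit word keeps each data point aligned to 8 bytes, which makes it easy to reason about how many readings fit in a flash page.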

Replying to @Automatid

Mostly, it lets us fit a lot of data into a little space; for example, rather than using a 16-bit short to store a 12-bit temperature, we can also fit a 2-bit record type and 2 bits for an accelerometer parameter in the extra bits. Same with the 14-bit accelerometer readings…

(original)
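
The 16-bit example from the tweet above can be sketched the same way. The bit positions here are an assumption (record type in the top two bits, accelerometer parameter next, unsigned 12-bit temperature at the bottom); the thread only gives the field widths.

```python
def pack_temperature(raw_temp, record_type, accel_param):
    """Fit a 12-bit temperature, 2-bit record type and 2-bit parameter in 16 bits."""
    if not 0 <= raw_temp < (1 << 12):
        raise ValueError("temperature does not fit in 12 bits")
    return ((record_type & 0x3) << 14) | ((accel_param & 0x3) << 12) | raw_temp


def unpack_temperature(word):
    """Return (raw_temp, record_type, accel_param) from a packed 16-bit word."""
    return word & 0xFFF, (word >> 14) & 0x3, (word >> 12) & 0x3
```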

Replying to @josecastillo

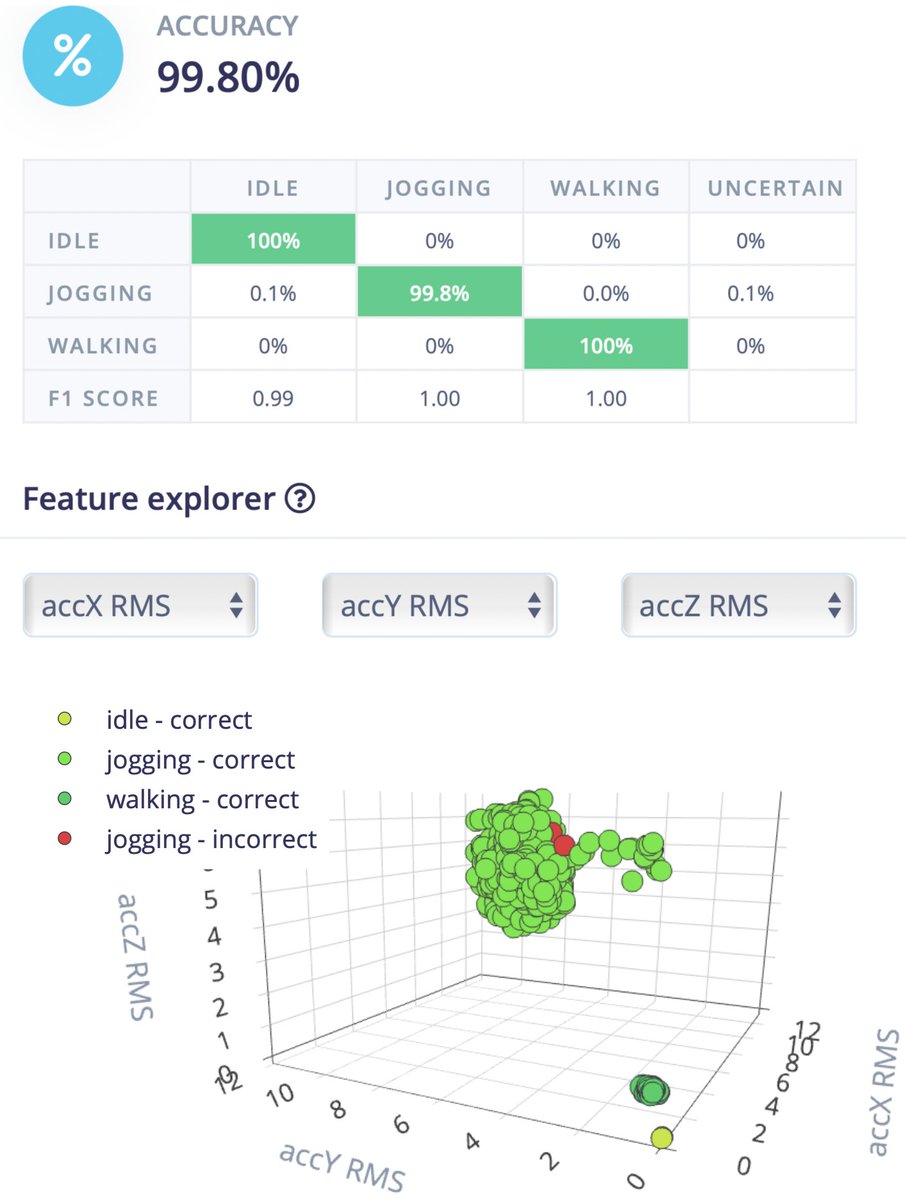

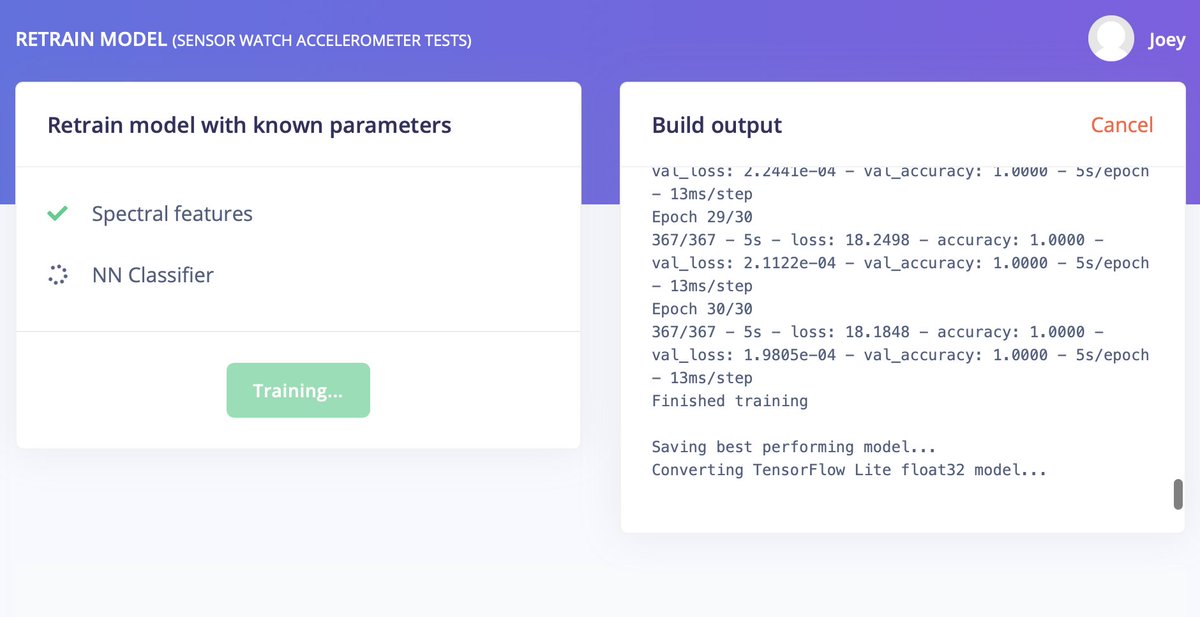

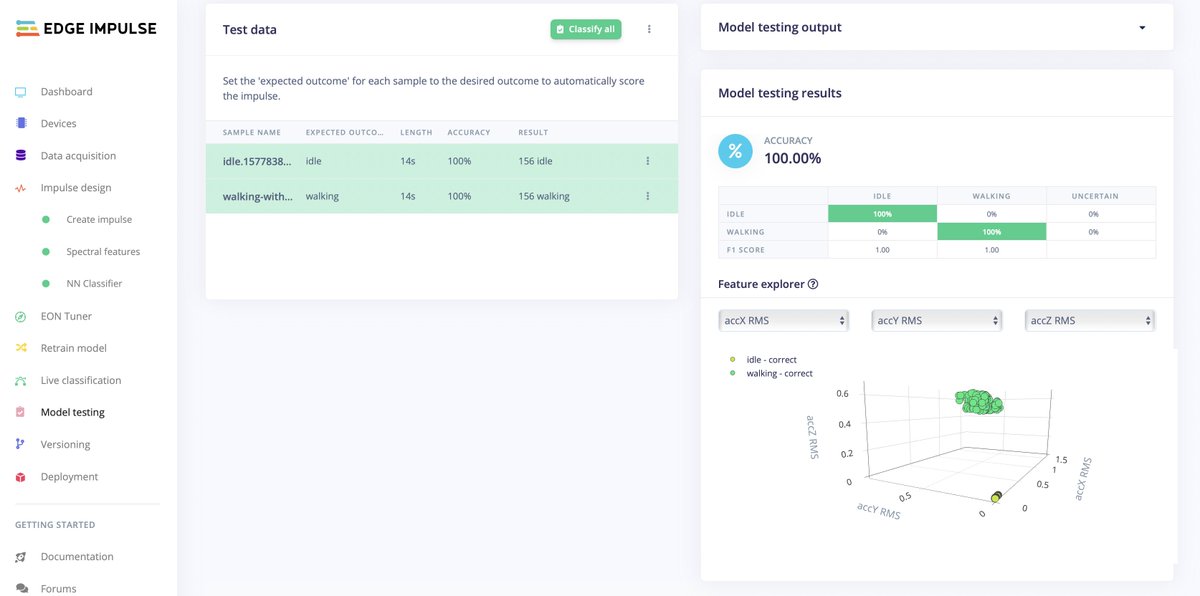

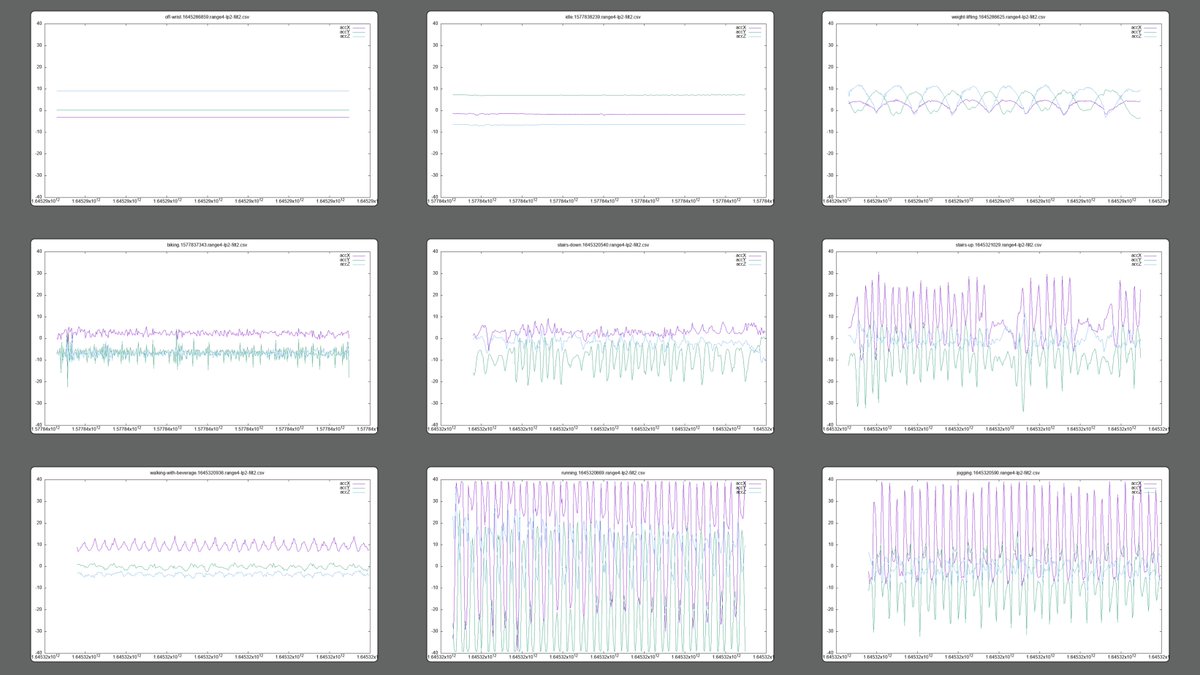

after trimming some of the obviously incorrect bits (and splitting that one sample where I stopped into two shorter ones where I didn’t), this is looking much better. Where it’ll get interesting is when I add one more category. A thing to do tomorrow.

(original)

Replying to @femtoduino

thank you!!

(original)

Replying to @josecastillo

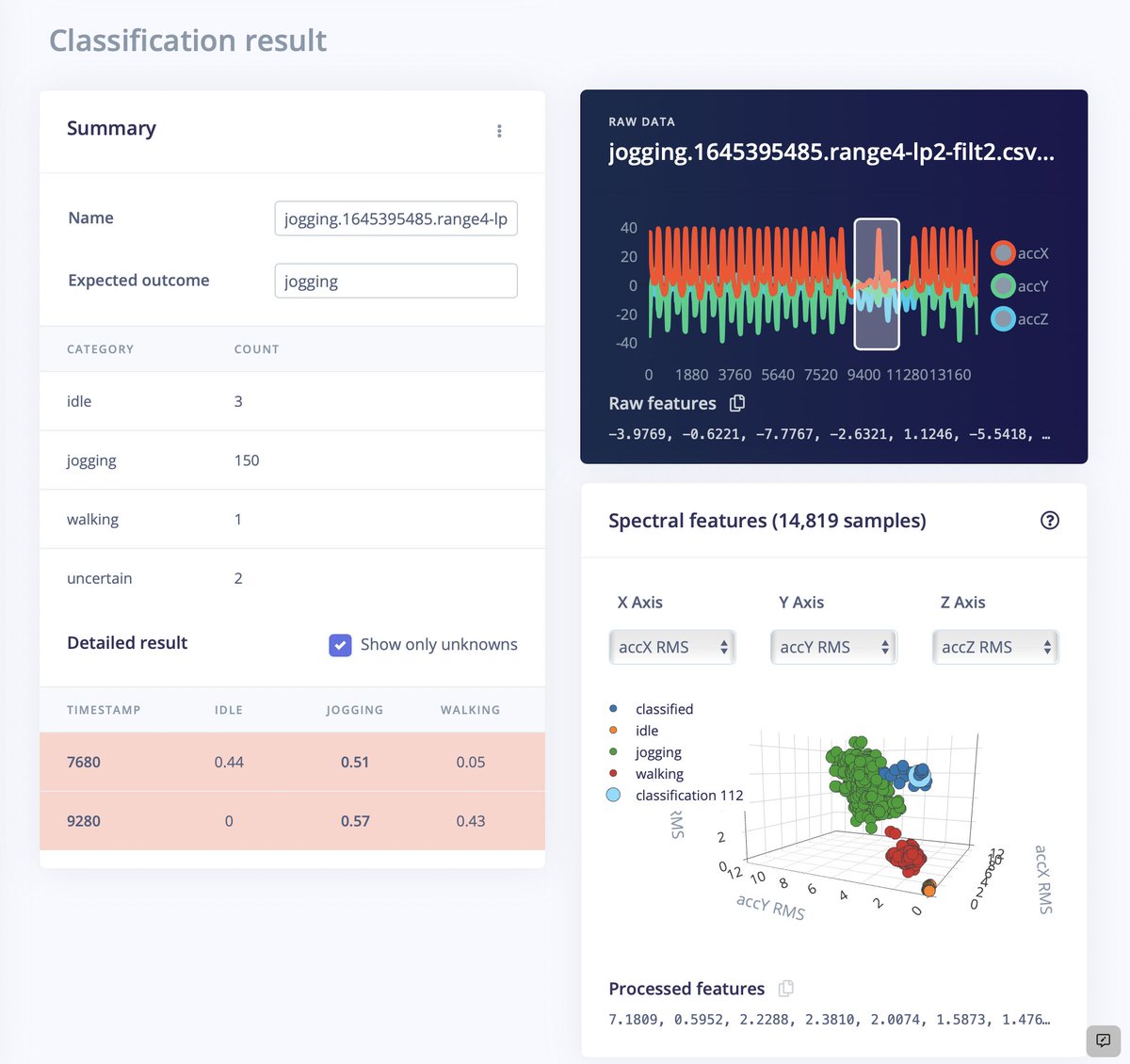

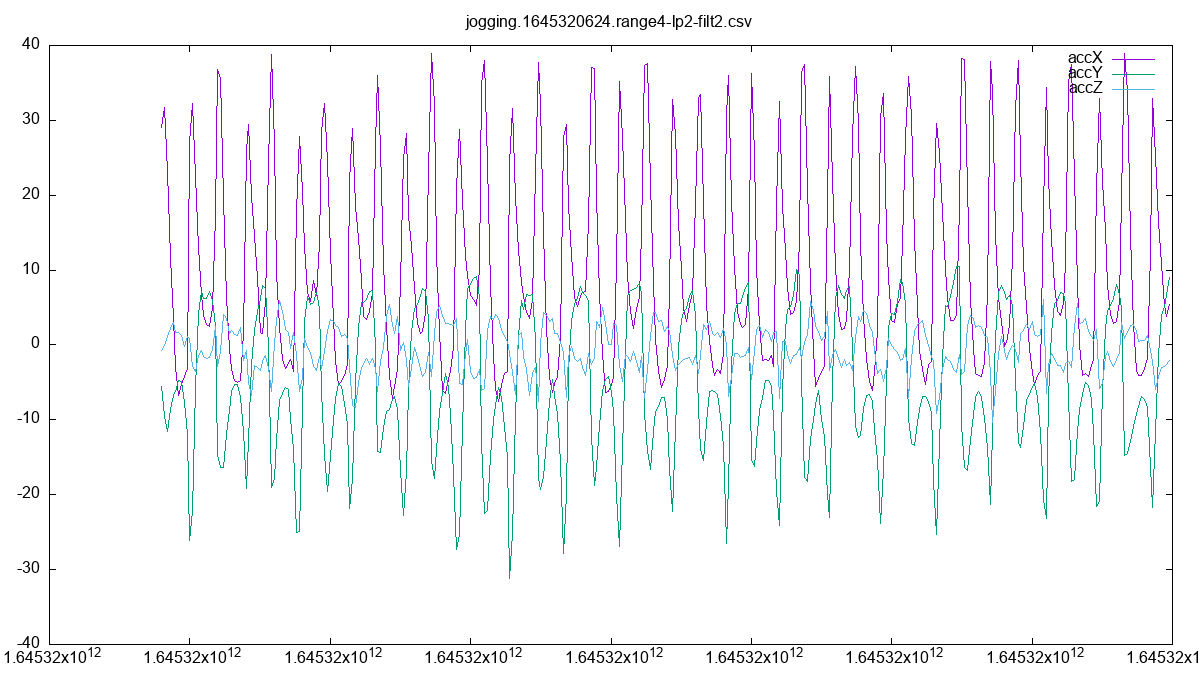

actually with some cleanup, this can probably improve further; I’m seeing some of my training data included bits where I was pressing the button to start motion capture, and hadn’t quite resumed jogging. a little QA on the data could improve things.

(original)

Replying to @josecastillo

And actually this might explain it: here’s the failed test, and it’s a sample where I must have stopped briefly and then resumed running. It gave the model pause, I suppose.

(original)

Replying to @josecastillo

Not bad for a 30 minute run! The tool split my jogging data into two separate sets, one for training and one for testing; running the testing data against the trained model, it’s 99.8% accurate for jogging.

(original)
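
For illustration, a train/test split like the one described above could look roughly like this. It is not Edge Impulse's implementation; the 80/20 ratio, the fixed seed and the sample count of 90 (three clips a minute for 30 minutes) are assumptions.

```python
import random


def split_samples(samples, test_fraction=0.2, seed=0):
    """Shuffle samples deterministically and split them into (train, test)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    test_count = round(len(shuffled) * test_fraction)
    return shuffled[test_count:], shuffled[:test_count]


def accuracy(predicted, expected):
    """Fraction of predictions that match the expected labels."""
    hits = sum(1 for p, e in zip(predicted, expected) if p == e)
    return hits / len(expected)


# Hypothetical clip names standing in for the recorded jogging samples.
clips = [f"JO.{i:04d}" for i in range(90)]
train, test = split_samples(clips)
```

Keeping the test clips out of training is what makes the reported accuracy meaningful: the model is scored on data it has never seen.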

Replying to @josecastillo

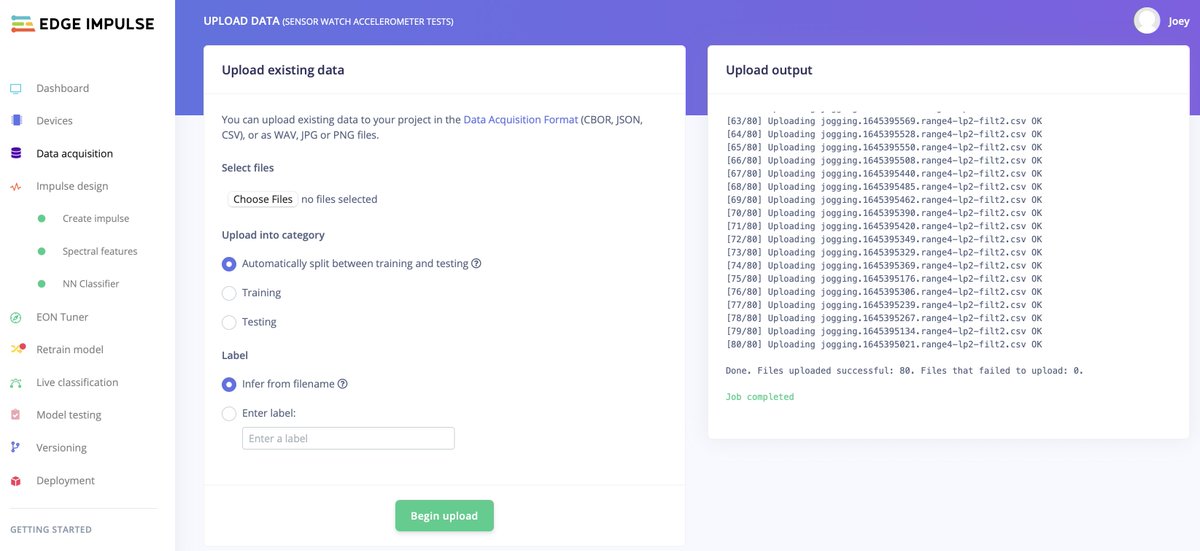

little over 30 minutes; decided to keep jogging until I filled 50% of my flash chip. Now to unwrap it and see what we got! Think I’m going to speed-run adding a class to my @EdgeImpulse model.

(original)

Replying to @josecastillo

it seems I’ve got plenty of walking and idle data; I figure the easiest class to add is jogging. 30 minute run, three 15-second samples a minute, and as a bonus I’ll technically be getting healthier the whole time. It’s also so nice out! If you can get past the whole 1° C thing…

(original)

Replying to @new299 and @EdgeImpulse

I also think it’ll be interesting (read: necessary) to get the watch on some other wrists for training and testing. Gait aside, I bet the acceleration profile looks v different on someone a foot taller who’s all arms :)

(original)

Replying to @new299 and @EdgeImpulse

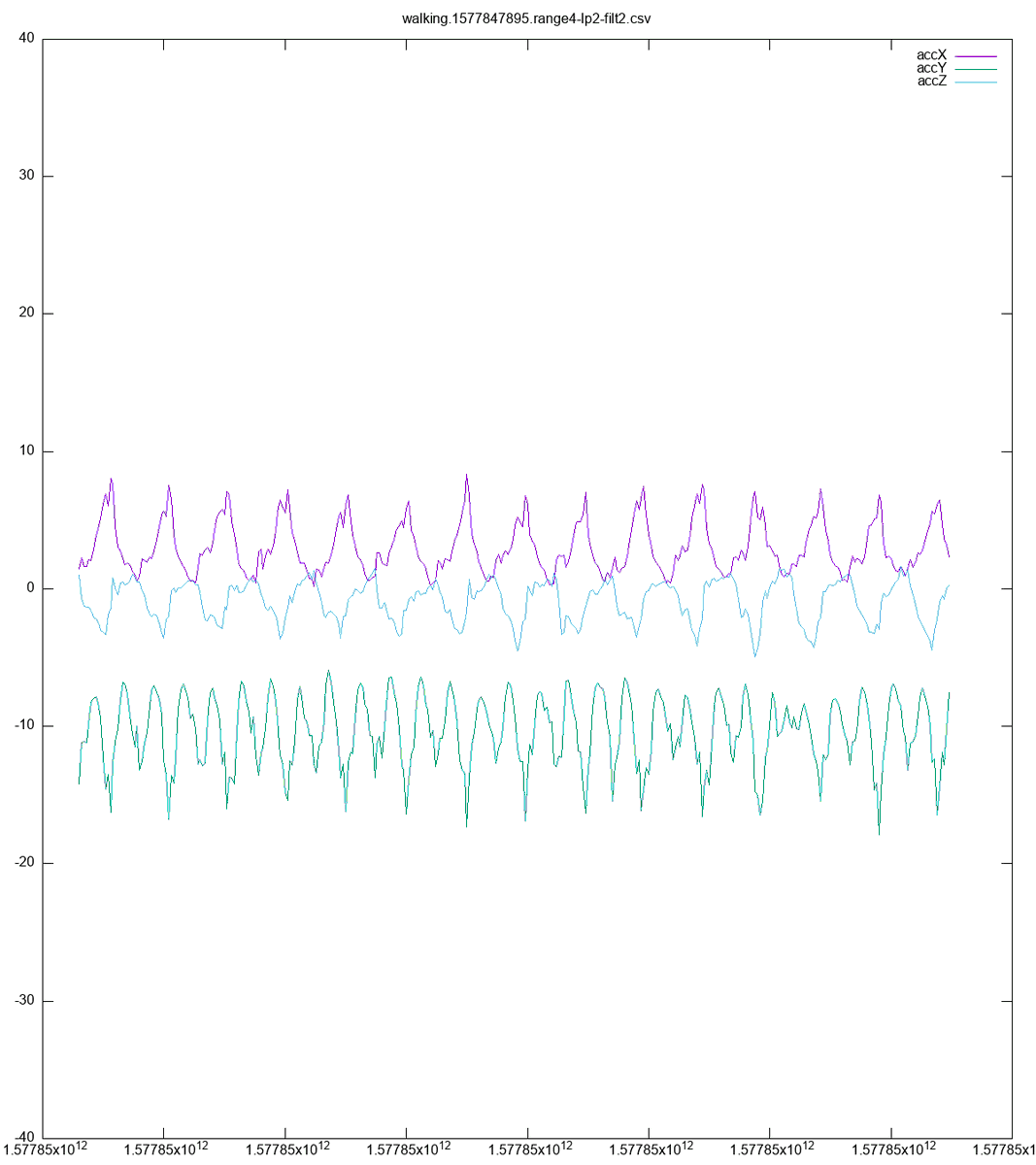

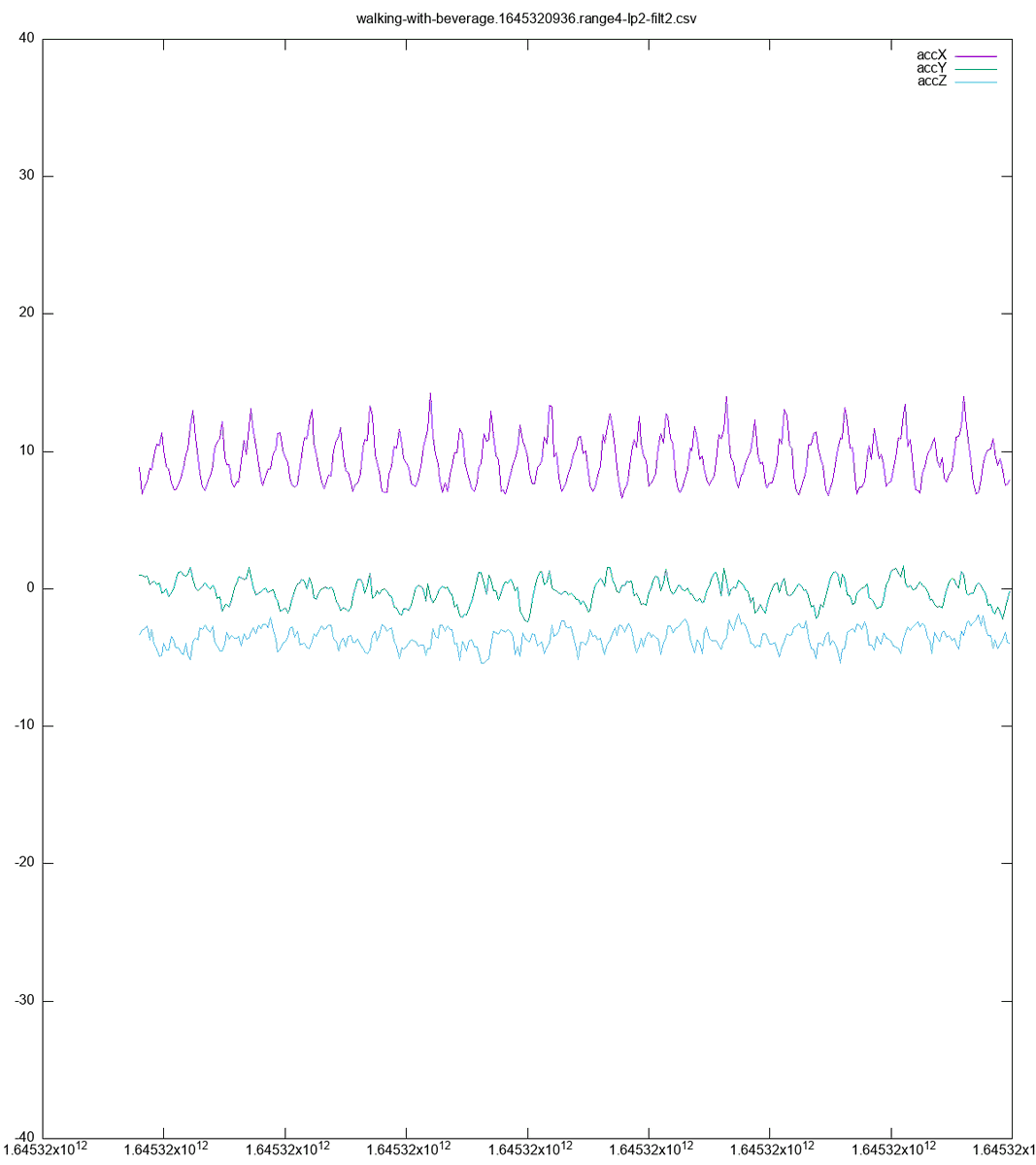

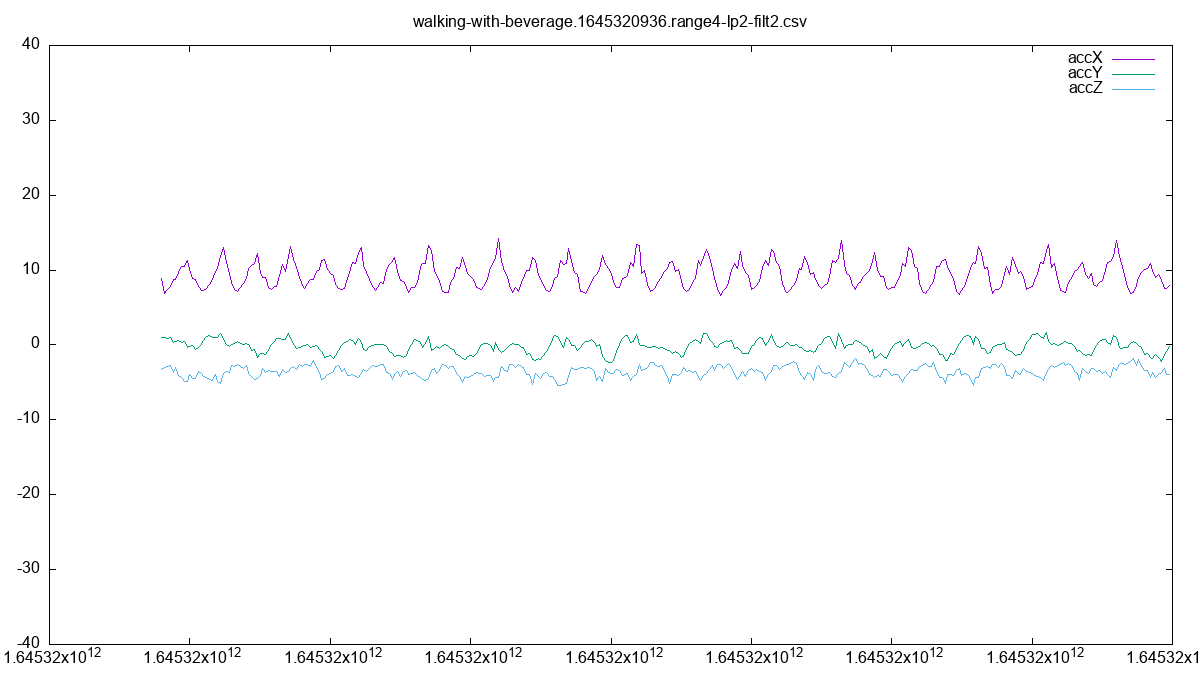

But yea, watch on the hand with the coffee, the raw values do look very different in absolute terms, but I can see the similarities over time. The model is using spectral analysis; it makes sense that it would see the similarities too!

(original)
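
A rough way to see why spectral features shrug off a change in absolute values: remove the mean, take a discrete Fourier transform, and look at which frequency dominates. This is only a sketch of the general idea, not the processing Edge Impulse actually performs; the 2 Hz test signal and the offsets are made up.

```python
import cmath
import math


def dominant_frequency(signal, sample_rate_hz):
    """Return the frequency (Hz) of the strongest non-DC component of a signal."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # drop the constant (gravity/orientation) offset
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(
            v * cmath.exp(-2j * math.pi * k * i / n) for i, v in enumerate(centered)
        )
        if abs(coeff) > best_mag:
            best_bin, best_mag = k, abs(coeff)
    return best_bin * sample_rate_hz / n


# Two made-up 4-second traces at 25 Hz: same 2 Hz rhythm, different offset and amplitude.
arm_swinging = [math.sin(2 * math.pi * 2 * i / 25) for i in range(100)]
holding_coffee = [0.9 + 0.5 * math.sin(2 * math.pi * 2 * i / 25) for i in range(100)]
```

Both traces report the same dominant frequency even though their raw values never overlap much.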

Replying to @new299

Funny thing, I had already captured a lot of walking data previously and ingested it into @EdgeImpulse; I only did the “walking with beverage” thing as a test to see if the classifier would still recognize it (which it did)! Next thing though is to add more classes to the model.

(original)

Replying to @josecastillo

the data structure I came up with for this is a bit… “extra”. Bitpacked beyond belief. But it does let me handle capturing data using different ranges and modes, and still leaves room for a two-character code for activity type that I can easily map to a filename when ingesting.

(original)
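
Mapping the two-character activity code to a filename at ingestion time could be as simple as the sketch below. The codes come from the list elsewhere in this thread; the label names and the `label.index.csv` filename pattern are assumptions.

```python
ACTIVITY_LABELS = {
    "ID": "idle",
    "SL": "sleeping",
    "WA": "walking",
    "JO": "jogging",
    "RU": "running",
    "HI": "hiking",
    "CL": "climbing",
    "BI": "biking",
    "EL": "elliptical",
    "WH": "washing_hands",
}


def filename_for(code, index):
    """Build a CSV filename such as 'jogging.0007.csv' from a two-character code."""
    try:
        label = ACTIVITY_LABELS[code.upper()]
    except KeyError:
        raise ValueError(f"unknown activity code: {code!r}") from None
    return f"{label}.{index:04d}.csv"
```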

Replying to @josecastillo

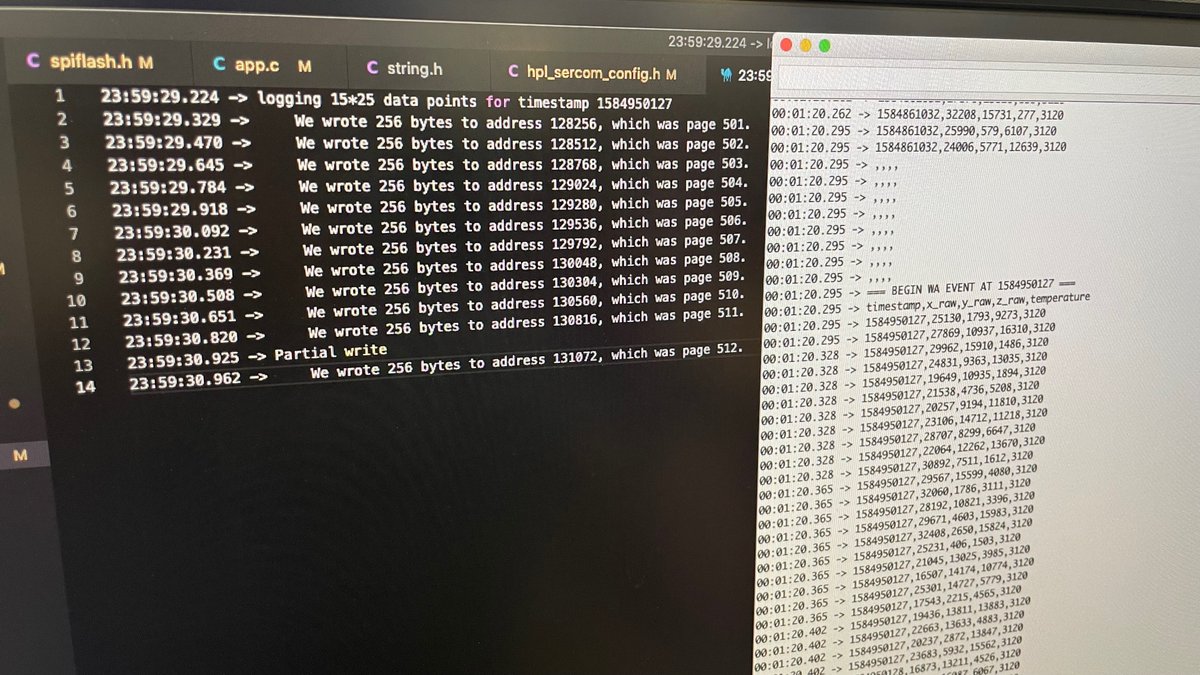

disassembling the watch and dumping via USB serial is almost certainly not the most efficient way to get motion data off the watch, but hey: there’s good and then there’s good enough. (it also looks cool)

(original)

Replying to @nebelgrau77

Earlier I was just counting number of interrupts when any axis exceeded (I think?) 2g, and found that it was pretty easy to tell when exercise was happening and when it wasn’t. This new motion data should help me refine this. https://mobile.twitter.com/josecastillo/status/1466410517082296321

(original)
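
The interrupt counting mentioned above happens in the accelerometer hardware, but the idea can be approximated offline on logged data: count each time any axis crosses from below the threshold to above it. A sketch, assuming samples already converted to g and the 2g threshold recalled in the tweet.

```python
def count_threshold_events(samples, threshold_g=2.0):
    """Count rising crossings where any axis magnitude exceeds threshold_g.

    samples is an iterable of (x, y, z) tuples in units of g.
    """
    events = 0
    above = False
    for x, y, z in samples:
        now_above = max(abs(x), abs(y), abs(z)) > threshold_g
        if now_above and not above:
            events += 1  # only count the moment the threshold is first exceeded
        above = now_above
    return events
```

A high count over a window suggests exercise; a count near zero suggests rest.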

Replying to @nebelgrau77

Ya that’s another reason for these tests: not just machine learning but my actual learning. I sense that anomaly detection could be an orientation change or an interrupt over a threshold to trigger classification, but I need to get a sense for what normal motion looks like first.

(original)

Replying to @rbaron_

Thanks for the kind words!!

(original)

Replying to @josecastillo

I also wrapped up the “repeat” feature on the data acquisition face, which let me track last night’s sleep all night long. These are just 15-second clips recorded every 10 minutes, but now I’m curious if I can interrupt on orientation changes as a “tossing and turning” detector.

(original)

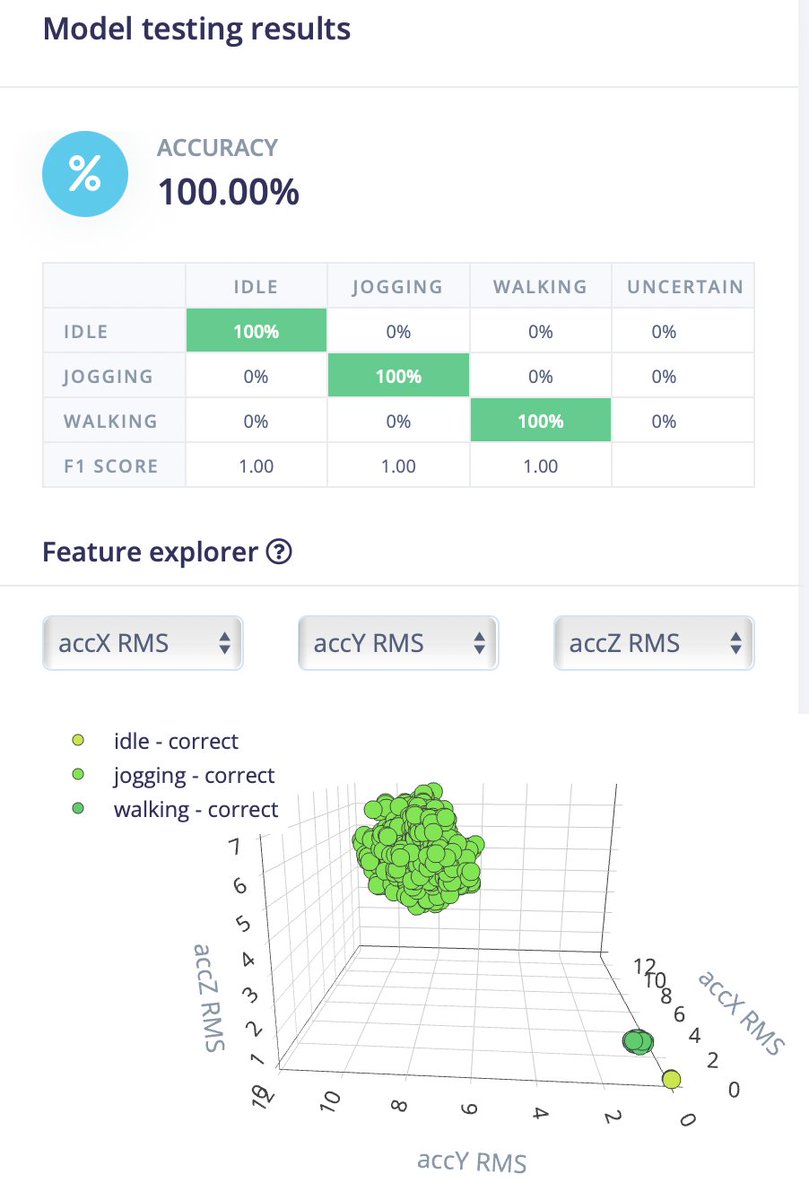

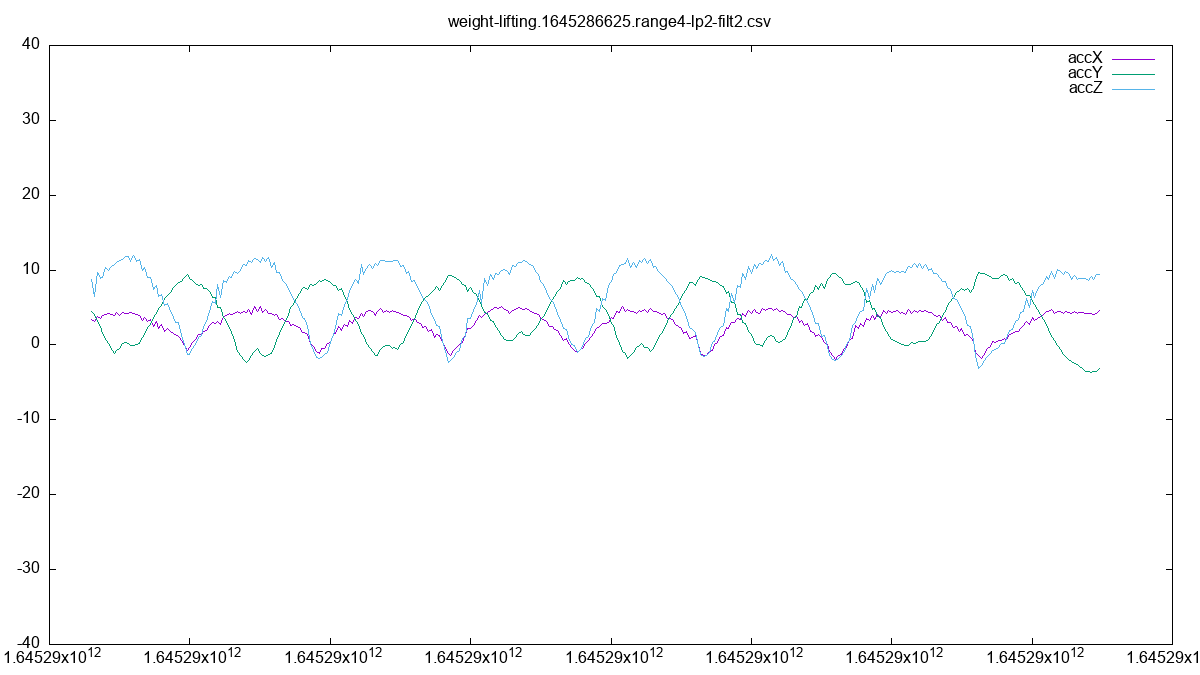

HOT DAMN, that’s some good looking data! #SensorWatch

(original)

Replying to @josecastillo

hard mode, amirite? Wordle 245 5/6*

⬛⬛🟩🟨⬛

⬛⬛🟩🟩🟨

🟩⬛🟩🟩🟩

🟩⬛🟩🟩🟩

🟩🟩🟩🟩🟩

(original)

Replying to @nebelgrau77 and @EdgeImpulse

Right now activity recognition, but I’m just getting started :)

(original)

Replying to @nebelgrau77 and @Developer122

For now I’m just planning to store it on a chip; the goal is to record several dozen 15-second samples of different kinds of motion, and then export it as CSV to feed to @EdgeImpulse. Then we make a classifier, and then (hopefully!) we’ll be able to do on-device machine learning!

(original)
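
A sketch of the CSV export step. The column names and the millisecond timestamp column are assumptions about what the ingestion side expects; the 25 Hz rate matches the capture rate mentioned elsewhere in this thread.

```python
import csv
import io


def samples_to_csv(samples, sample_rate_hz=25):
    """Render (x, y, z) samples as CSV text with a millisecond timestamp column."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["timestamp", "accX", "accY", "accZ"])
    for i, (x, y, z) in enumerate(samples):
        # Timestamps are synthesized from the sample index, not read from a clock.
        writer.writerow([i * 1000 // sample_rate_hz, x, y, z])
    return out.getvalue()
```

For example, `samples_to_csv([(1, 2, 3), (4, 5, 6)])` yields a header row followed by rows stamped 0 ms and 40 ms.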

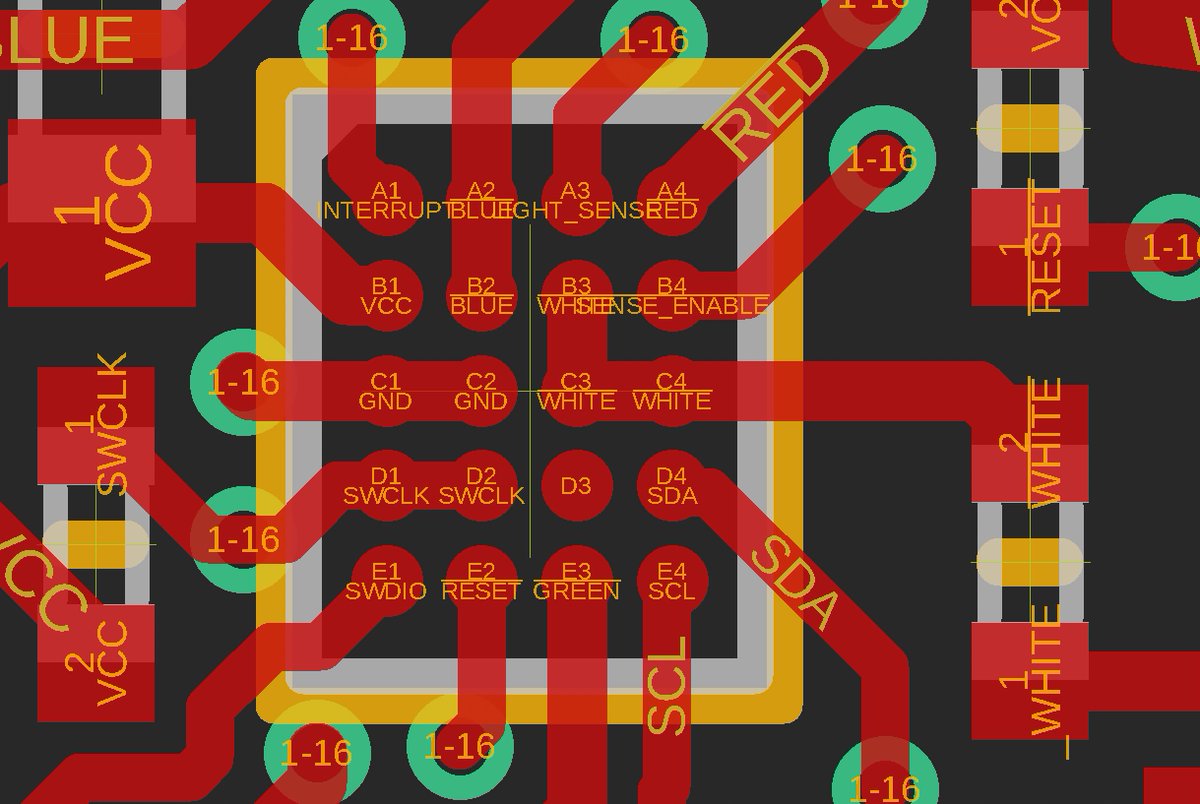

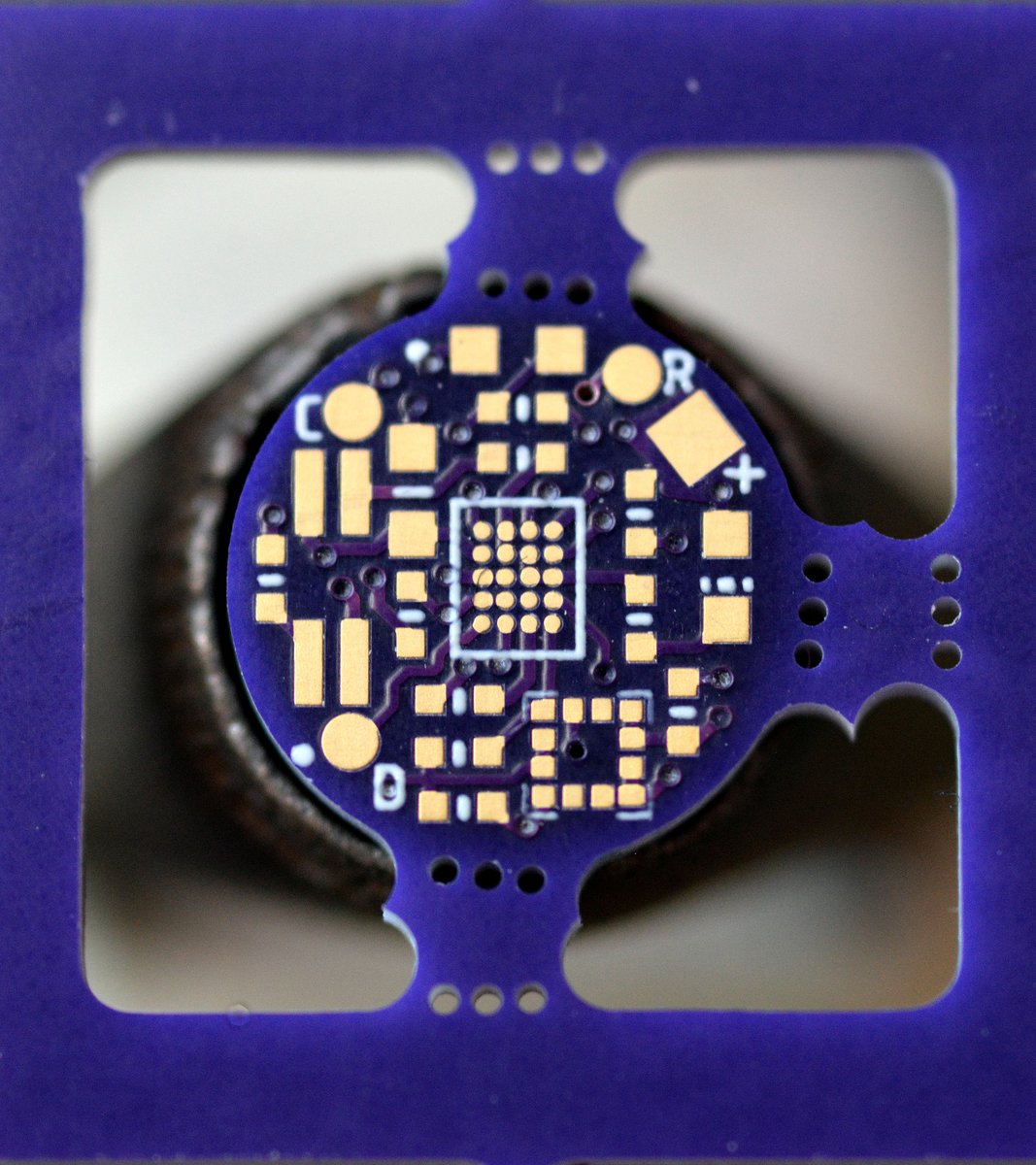

Replying to @DurandA23, @oshpark and @MicrochipMakes

Yea, no microvias with this process, so I routed them through some outer pads. In the case of GND and SWCLK, I just can’t use pins C1 and D1; in the case of BLUE and WHITE, I can actually drive a couple of pins with the same PWM peripheral to deliver more current to those LED’s.

(original)

Replying to @Josh_McGee_G, @oshpark and @MicrochipMakes

I’m using the SAM D10 for this one! Little sibling to the SAM D21 that’s ever so popular among makers and hackers. They even make a version of it (SAM D11) with native USB! https://www.microchip.com/en-us/product/ATSAMD10D14 https://www.microchip.com/en-us/product/ATSAMD11D14

(original)

Replying to @nebelgrau77 and @Developer122

Yeah, it’s a 32-level FIFO so filling at 25 Hz and emptying it every second, it never gets full. The clocks definitely aren’t synced — sometimes I get 25 data points, sometimes 26 — but in the end I still get all the data that was logged over the desired period.

(original)
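
The 25-or-26 behaviour falls out of two clocks that don't quite agree. Below is a small simulation, assuming the accelerometer actually runs slightly fast (25.4 Hz is a made-up figure) while the RTC drains the FIFO exactly once a second; treating an overfull FIFO as an error is also a modelling choice.

```python
def drain_counts(sample_rate_mhz, seconds, fifo_depth=32):
    """Simulate draining a FIFO once per second.

    sample_rate_mhz is the accelerometer's true rate in millihertz, so the
    arithmetic stays in integers. Returns the number of samples read per drain.
    """
    counts = []
    produced = 0
    for tick in range(1, seconds + 1):
        total = tick * sample_rate_mhz // 1000  # samples generated so far
        pending = total - produced
        if pending > fifo_depth:
            raise OverflowError("FIFO filled before it was drained")
        counts.append(pending)
        produced = total
    return counts
```

With a 25.4 Hz source, a 15-second clip drains as a mix of 25s and 26s, and the total still accounts for every sample produced in the window.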

Replying to @josecastillo

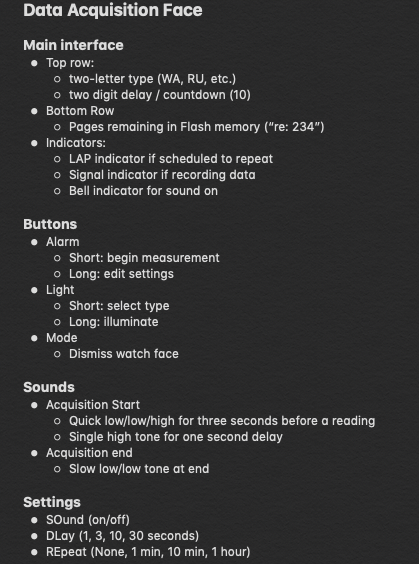

I’m also using the “signal” segment on the screen to indicate when data capture is active, but auditory feedback is better here. Also using the big main line to display the percentage of Flash storage remaining. The % sign is a little weird without the slash but I think it works!

(original)

may I never tire of bleeps and bloops! So far this is just the UI, but in short: you select the kind of motion event you want to capture (WH=Wash Hands); it counts down to the start of measurement (beep!), records 15 seconds of motion data and then signals completion (boop boop).

(original)

Replying to @josecastillo

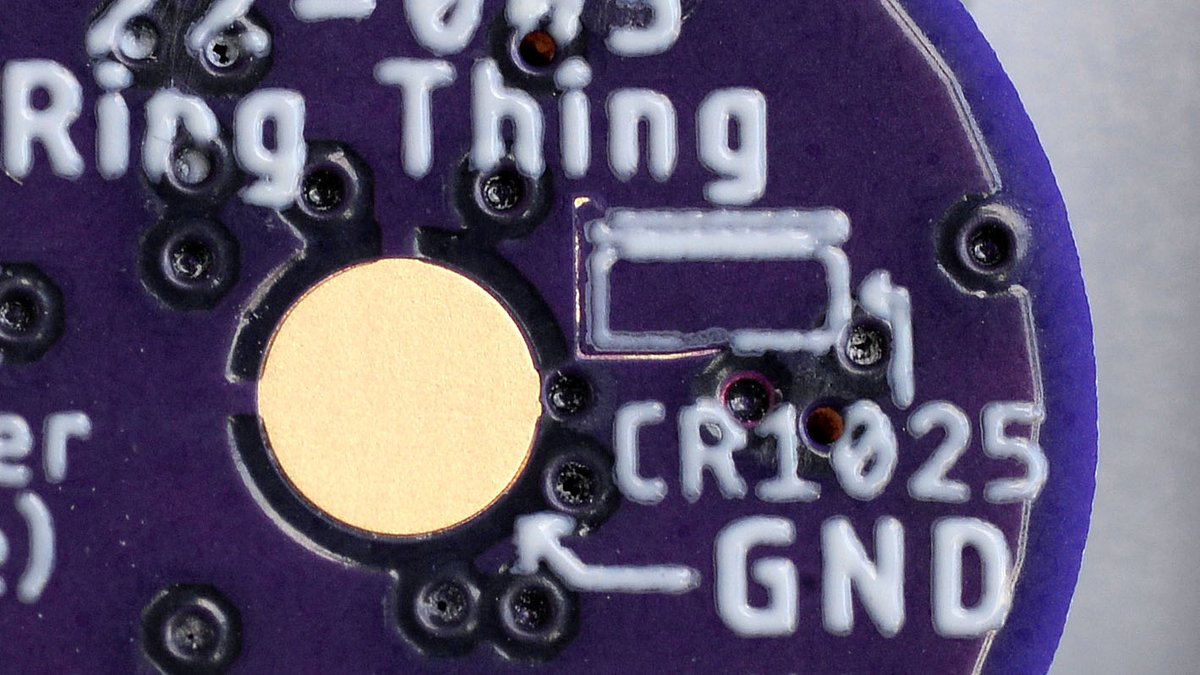

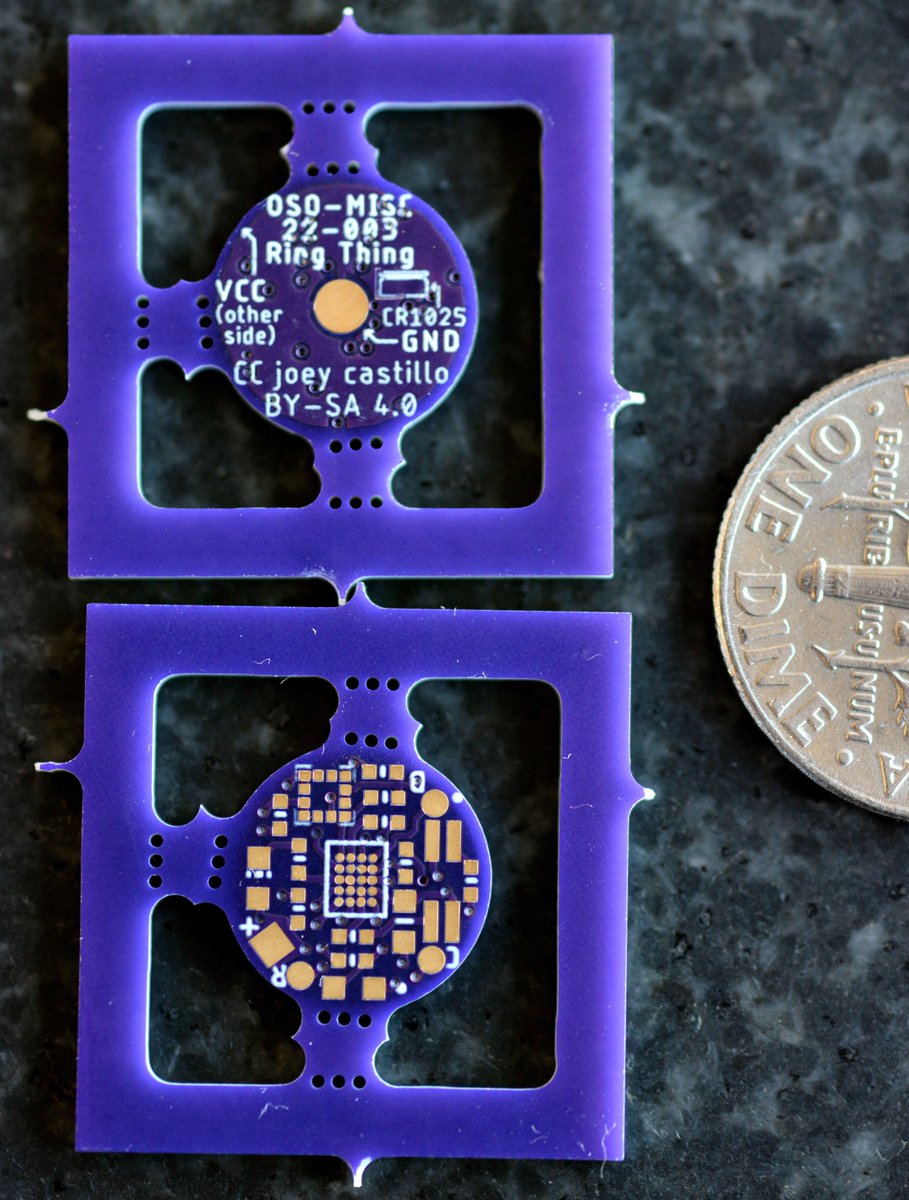

final note and then it’s back to work: it’s at the limits of both the process and the resolving power of my macro lens, but I love self-documenting PCBs, and I love that this tiny 2 mm × 1 mm assembly instructions diagram rendered so nicely. (that’s a coin cell and a metal clip!)

(original)

Replying to @josecastillo

Also: what an incredible time to be making things. For under $10 (with free shipping!), you can just design a six-layer board on a lark and have it in hand two weeks later? @oshpark: 🙌🏽

(original)

also omg the boards for the ring thing came in and THEY ARE SO TINY. This is a 6-layer board that sports a microcontroller, accelerometer, light sensor, RGB+W LED’s, OH AND IT’S 9.8 MILLIMETERS ACROSS! Now who @ Microchip do I gotta slide $20 to get some WLCSP SAM D10 samples? 😛

(original)

RT @crowd_supply: It’s your last chance to support Sensor Watch, a hackable ARM Cortex M0+ upgrade for the classic Casio wristwatch from @j…

(original)

Replying to @CreeperWShades, @oshpark and @OSHStencils

I want a list of “off” signals for every television on earth. A wrist-mounted anti-TV cannon!

(original)

Replying to @nebelgrau77

Just curious if you can share, which accelerometer? I had been using the LIS2DH, but moved to the LIS2DW because it seemed more featureful and easier to place. Always interested in what folks are using!

(original)

Replying to @nebelgrau77

So true; I’m realizing how much I was only scratching the surface in past projects!

(original)

Replying to @josecastillo

anyway. that watch face is my task today. Goal is to get it done before I go on a hiking trip next week so I can capture all kinds of juicy motion data. Made some UX notes last night; it’s wild how much stuff you can stuff into a two-button interface.

(original)

Replying to @nebelgrau77

I can use the interrupts, but in this case I don’t have to! Since I’m capturing data at 25 Hz and I have an internal 1 Hz tick from the RTC, I can capture data every second and never risk an overrun. But I did connect the interrupt pin in case it becomes useful for other cases :)

(original)

Replying to @josecastillo

This is cool because instead of having to wake the CPU 25x a second (or worse, just running it in IDLE at 350 µA), the CPU only has to wake up once a second — yet it still gets a full sense of everything that happened in that second! This is how we’re going to log training data.

(original)

Yesterday I implemented the FIFO queue on our LIS2DW accelerometer, and I have to say it looks pretty rad! Most serial plots stream data in real time. This is streaming as a batch transfer: the accelerometer stores 25 data points for us, then we burst them out once a second. 😎

(original)

Replying to @CreeperWShades, @oshpark and @OSHStencils

Should be possible! IMO the best way to do it would be to reverse-mount the LED in a small cutout like this. The design file for this board is “OSO-MISC-21-015 Temperature and Light Board” here: https://github.com/joeycastillo/Sensor-Watch/tree/main/PCB/Sensor%20Boards https://twitter.com/josecastillo/status/1478386879150047240

(original)

Replying to @josecastillo

five is the new four. Wordle 244 5/6*

⬛⬛⬛⬛🟩

⬛⬛⬛⬛🟩

⬛⬛🟨⬛🟩

⬛🟩🟩🟩🟩

🟩🟩🟩🟩🟩

(original)

Replying to @josecastillo

aw man, and I missed yesterday’s wordle!

(original)

Replying to @josecastillo

well the code is a mess and I’m just getting out of the shop at midnight, but I’ll be damned if that’s not logging data to Flash and dumping that data back out as a CSV. It’s all random numbers and simulated timestamps right now, but tomorrow. Tomorrow the magic happens.

(original)

Replying to @bencpye

ooh, that motion has got to make for an interesting graph!

(original)

Replying to @josecastillo

idea is we log these kinds of events and train the ML model to recognize them. Off top of head I have:

ID - idle, not doing anything

SL - sleeping

WA - walking

JO - jogging

RU - running

HI - hiking

CL - climbing

BI - biking

EL - elliptical

How else do we move? what am I missing?

(original)

Replying to @josecastillo

ok I’m staying late. There are three tracks to this: the accelerometer streaming (done), the flash logging (in progress) and the UX/UI. I think at first the goal will be a watch face to manually log events of a given type, and I think that type needs to be two characters long…

(original)